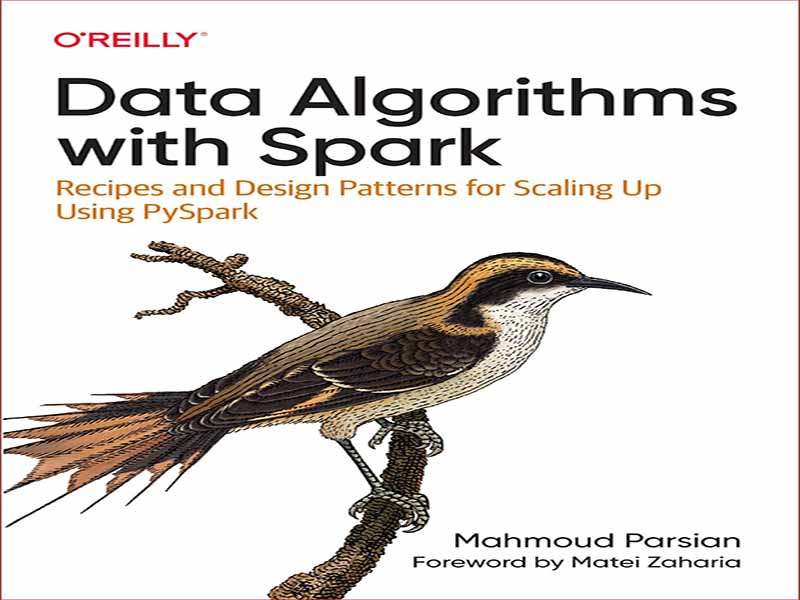

Download the book Data Algorithms with Spark: Recipes and Design Patterns for Scaling Up using PySpark

Author:

Bibis Magazine

See more

- Download book: Data Analysis and Visualization

- Download book: Advanced Data Analytics Using Python

- Download book: Introduction to Optimization with Applications in Machine Learning and Data Analytics

- Download book: Essentials of Data Analytics

- Download book: Educational Data Analytics for Teachers and School Leaders

- Download book: Practical SQL – A Beginner's Guide to Storytelling with Data

- Download book: Data Science and Big Data Analytics – Discovering, Analyzing, Visualizing and Presenting Data

- Download book: Data Analytics – Concepts, Techniques, and Applications

- Download book: A General Introduction to Data Analytics

- Download book: Regression Models for Categorical and Count Data

- Download book: Applied Linear Regression for Longitudinal Data

- Download book: Hands-On Healthcare Data – Taming the Complexity of Real-World Data

- Download book: Data for Social Good – Non-Profit Sector Data Projects

- Download book: Data Analytics for Business

- Download book: Data Analytics Applications in Emerging Markets

- Download book: Big Data Analytics with Spark

- Download book: Learning Spark

- Download book: Spark for Python Developers

- Download book: Applied Data Science Using PySpark

- Download book: Statistical Analysis with Excel for Beginners

- Download book: Scaling Machine Learning with Spark

- Download book: Analytics in a Big Data World

- Download book: Computational Methods for Data Analysis

- Download book: Big Data Analytics Methods

- Download book: Data Analytics – Models and Algorithms for Intelligent Data Analysis

- Download book: SQL for Data Analytics

- Download book: Text Analytics Toolbox – MATLAB

- Download book: Beginning Apache Spark 3 with DataFrame, Spark SQL, Structured Streaming, and Machine Learning Library

- Download book: The World of Business with Data and Analytics

- Download book: Julia for Data Analysis

- Download book: Simplify Big Data Analytics with Amazon EMR

- Download book: Time Series Data Analysis in Oceanography

- Download book: Numerical Methods in Environmental Data Analysis

- Download book: Data Analytics for Accounting

- Download book: Big Data Processing Using Spark in Cloud

- Download book: Observability Engineering

- Download book: Survival Analysis

- Download book: From Observations to Optimal Phylogenetic Trees – Phylogenetic Analysis of Morphological Data, Volume 1

- Download book: Data Exploration with Google Data Studio – A Practical Guide to Using Data Studio to Create Appeal

- Download book: Data Science and Analytics for SMEs

- Download book: Fundamentals of Data Engineering – Plan and Build Robust Data Systems

- Download book: Practical Python Data Wrangling and Data Quality – Reading, Cleaning, and Analyzing Data

- Download book: Intelligent Healthcare Engineering Management and Risk Analytics

- Download book: Statistics and Analysis of Scientific Data

- Download book: Machine Learning from Theory to Application

- Download book: Machine Learning for Computer Scientists and Data Analysts from an Applied Perspective

- Download book: Data Visualization with Python and JavaScript

- Download book: Data Engineering with Alteryx – Applying DataOps Practices with Alteryx

- Download book: Data Analytics Strategy for Business
